Use cases

Wazuh is often used to meet compliance requirements (such as PCI DSS or HIPAA) and configuration standards (CIS hardening guides). It is also popular among IaaS users (e.g., Amazon AWS, Azure or Google Cloud), where deploying a host-based IDS in the running instances can be combined with the analysis of infrastructure events pulled directly from the cloud provider API.

Here is a list of common use cases:

Signature-based log analysis

Automated log analysis and management accelerate threat detection. There are many cases where evidence of an attack can be found in the logs of your devices, systems and applications. Wazuh can be used to automatically aggregate and analyze log data.

The Wazuh agent running on the monitored host is usually the one in charge of reading operating system and application log messages, forwarding those to the Wazuh server where the analysis takes place. When no agent is deployed, the server can also receive data via syslog from network devices or applications.

Wazuh uses decoders to identify the source application of the log message and then analyzes the data using application-specific rules. Here is an example of a rule used to detect SSH authentication failure events:

```xml
<rule id="5716" level="5">
  <if_sid>5700</if_sid>
  <match>^Failed|^error: PAM: Authentication</match>
  <description>SSHD authentication failed.</description>
  <group>authentication_failed,pci_dss_10.2.4,pci_dss_10.2.5,</group>
</rule>
```

Rules include a match field, used to define the pattern the rule looks for, and a level attribute that specifies the resulting alert priority.

The manager will generate an alert every time an event collected by one of the agents or via syslog matches a rule with a level higher than zero.
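To make the matching logic concrete, here is a minimal Python sketch of how rule 5716 could be evaluated against a raw sshd log line. It is not Wazuh's analysis engine: the syslog pre-decoding is a simplified regular expression, the parent rule 5700 is modeled as "the program name is sshd" (an assumption), and Python's re module stands in for Wazuh's own match syntax, which differs in places. The names Rule, evaluate and SYSLOG_RE are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    """Hypothetical, simplified model of a Wazuh rule."""
    rule_id: int
    level: int
    if_sid: Optional[int]  # parent rule that must match first
    match: str             # pattern applied to the log message (Python regex here)
    description: str


SSHD_BASE_RULE = 5700  # assumed: the generic rule for events decoded as sshd
RULE_5716 = Rule(5716, 5, SSHD_BASE_RULE,
                 r"^Failed|^error: PAM: Authentication",
                 "SSHD authentication failed.")

# Simplified pre-decoding of "<Mon> <day> <hh:mm:ss> <host> <program>[<pid>]: <message>".
SYSLOG_RE = re.compile(r"^\w{3}\s+\d+ [\d:]{8} (\S+) ([\w-]+)(?:\[\d+\])?: (.*)$")


def evaluate(line: str, rule: Rule) -> Optional[dict]:
    """Return an alert dict if the line matches the rule and its level is above zero."""
    parsed = SYSLOG_RE.match(line)
    if parsed is None:
        return None
    hostname, program, message = parsed.groups()
    # The child rule is only tried when its parent matched; the parent is
    # modeled here as "the sshd decoder was selected".
    if rule.if_sid == SSHD_BASE_RULE and program != "sshd":
        return None
    if not re.search(rule.match, message):
        return None
    if rule.level <= 0:
        return None  # level 0 rules never generate alerts
    return {
        "rule": {"id": str(rule.rule_id), "level": rule.level,
                 "description": rule.description},
        "hostname": hostname,
        "program_name": program,
        "full_log": line,
    }
```

For instance, the full_log line of the alert shown next would make evaluate return an alert for rule 5716, while an "Accepted password" line from sshd would not.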

Here is an example found in /var/ossec/logs/alerts/alerts.json:

```json
{
  "agent": {
    "id": "1041",
    "ip": "10.0.0.125",
    "name": "vpc-agent-centos-public"
  },
  "decoder": {
    "name": "sshd",
    "parent": "sshd"
  },
  "dstuser": "root",
  "full_log": "Mar 5 18:26:34 vpc-agent-centos-public sshd[9549]: Failed password for root from 58.218.199.116 port 13982 ssh2",
  "location": "/var/log/secure",
  "manager": {
    "name": "vpc-ossec-manager"
  },
  "program_name": "sshd",
  "rule": {
    "description": "Multiple authentication failures.",
    "firedtimes": 349,
    "frequency": 10,
    "groups": ["syslog", "attacks", "authentication_failures"],
    "id": "40111",
    "level": 10,
    "pci_dss": ["10.2.4", "10.2.5"]
  },
  "srcip": "58.218.199.116",
  "srcport": "13982",
  "timestamp": "2017-03-05T10:26:59-0800"
}
```
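Assuming the usual alerts.json layout of one JSON object per line, the file can be post-processed with a few lines of code. The Python sketch below (the helpers high_level_alerts and top_sources are hypothetical) keeps alerts at or above a chosen rule level and counts them per srcip, which is a quick way to spot brute-force sources like the one above.

```python
import json
from collections import Counter
from typing import Iterable, Iterator


def high_level_alerts(lines: Iterable[str], min_level: int = 10) -> Iterator[dict]:
    """Yield parsed alerts whose rule level is at least min_level.

    Assumes one JSON object per line; blank lines are skipped."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        alert = json.loads(line)
        if alert.get("rule", {}).get("level", 0) >= min_level:
            yield alert


def top_sources(lines: Iterable[str], min_level: int = 10) -> Counter:
    """Count high-level alerts per source IP; alerts without srcip are ignored."""
    return Counter(a["srcip"] for a in high_level_alerts(lines, min_level) if "srcip" in a)
```

On a manager, top_sources can be given an open handle to /var/ossec/logs/alerts/alerts.json (reading it usually requires root privileges), and .most_common(5) then lists the noisiest sources.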

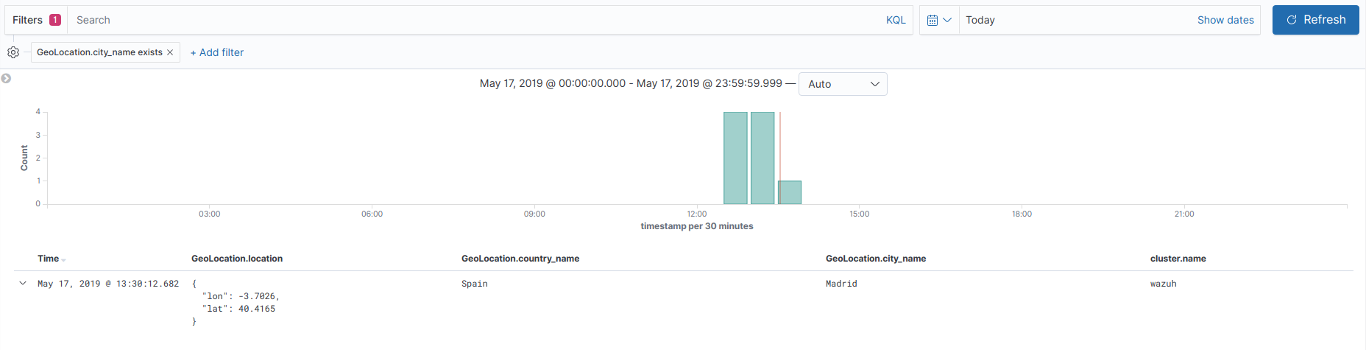

Once generated by the manager, the alerts are sent to the Elastic Stack component where they are enriched with Geolocation information, stored and indexed. Kibana can then be used to search, analyze and visualize the data. See below an alert as displayed in the interface:

Wazuh provides a default ruleset, updated periodically, with over 1,600 rules for different applications. In addition, Wazuh allows users to customize its ruleset.

File integrity monitoring

The file integrity monitoring (FIM) component detects and alerts when operating system and application files are modified. This capability is often used to detect access or changes to sensitive data. If your servers are in scope for PCI DSS, requirement 11.5 states that you must install a file integrity monitoring solution to pass your audit.

Below is an example of an alert generated when a monitored file is changed. Metadata includes MD5, SHA1 and SHA256 checksums, file sizes (before and after the change), file permissions, file owner, content changes and the user who made these changes (who-data).

```json
{
  "timestamp": "2018-07-10T14:05:28.452-0800",
  "rule": {
    "level": 7,
    "description": "Integrity checksum changed.",
    "id": "550",
    "firedtimes": 10,
    "mail": false,
    "groups": ["ossec", "syscheck"],
    "pci_dss": ["11.5"],
    "gpg13": ["4.11"],
    "gdpr": ["II_5.1.f"]
  },
  "agent": {
    "id": "058",
    "ip": "10.0.0.121",
    "name": "vpc-agent-debian"
  },
  "manager": {
    "name": "vpc-ossec-manager"
  },
  "id": "1531224328.283446",
  "syscheck": {
    "path": "/etc/hosts.allow",
    "size_before": "421",
    "size_after": "433",
    "perm_after": "100644",
    "uid_after": "0",
    "gid_after": "0",
    "md5_before": "4b8ee210c257bc59f2b1d4fa0cbbc3da",
    "md5_after": "acb2289fba96e77cee0a2c3889b49643",
    "sha1_before": "d3452e66d5cfd3bcb5fc79fbcf583e8dec736cfd",
    "sha1_after": "b87a0e558ca67073573861b26e3265fa0ab35d20",
    "sha256_before": "6504e867b41a6d1b87e225cfafaef3779a3ee9558b2aeae6baa610ec884e2a81",
    "sha256_after": "bfa1c0ec3ebfaac71378cb62101135577521eb200c64d6ee8650efe75160978c",
    "uname_after": "root",
    "gname_after": "root",
    "mtime_before": "2018-07-10T14:04:25",
    "mtime_after": "2018-07-10T14:05:28",
    "inode_after": 268234,
    "diff": "10a11,12\n> 10.0.12.34\n",
    "event": "modified",
    "audit": {
      "user": {"id": "0", "name": "root"},
      "group": {"id": "0", "name": "root"},
      "process": {"id": "82845", "name": "/bin/nano", "ppid": "3195"},
      "login_user": {"id": "1000", "name": "smith"},
      "effective_user": {"id": "0", "name": "root"}
    }
  },
  "decoder": {
    "name": "syscheck_integrity_changed"
  },
  "location": "syscheck"
}
```
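The checksum comparison behind such an alert can be sketched in Python as follows. This is a simplified model, not the syscheck implementation: it hashes a file with MD5, SHA1 and SHA256, records a few stat() attributes, and reports a "modified" event when any checksum differs between two snapshots. It does not produce the diff or the who-data (audit) fields, and file_snapshot and compare are hypothetical names.

```python
import hashlib
import os
from typing import Optional

HASHES = ("md5", "sha1", "sha256")


def file_snapshot(path: str) -> dict:
    """Collect checksums and a few stat() attributes like those in a syscheck alert."""
    st = os.stat(path)
    digests = {name: hashlib.new(name) for name in HASHES}
    with open(path, "rb") as f:
        # Read in chunks so large files do not have to fit in memory.
        for chunk in iter(lambda: f.read(65536), b""):
            for digest in digests.values():
                digest.update(chunk)
    snapshot = {
        "size": str(st.st_size),
        "perm": oct(st.st_mode)[2:],  # e.g. "100644" for a regular rw-r--r-- file
        "uid": str(st.st_uid),
        "gid": str(st.st_gid),
        "inode": st.st_ino,
    }
    snapshot.update({name: d.hexdigest() for name, d in digests.items()})
    return snapshot


def compare(path: str, before: dict, after: dict) -> Optional[dict]:
    """Return a syscheck-like event if any checksum changed, otherwise None."""
    if all(before[h] == after[h] for h in HASHES):
        return None
    event = {"path": path, "event": "modified"}
    for key in ("size",) + HASHES:
        event[f"{key}_before"] = before[key]
        event[f"{key}_after"] = after[key]
    for key in ("perm", "uid", "gid", "inode"):
        event[f"{key}_after"] = after[key]
    return event
```

In this sketch the "before" snapshot would be stored from a previous scan and compared with a fresh one on the next scan.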

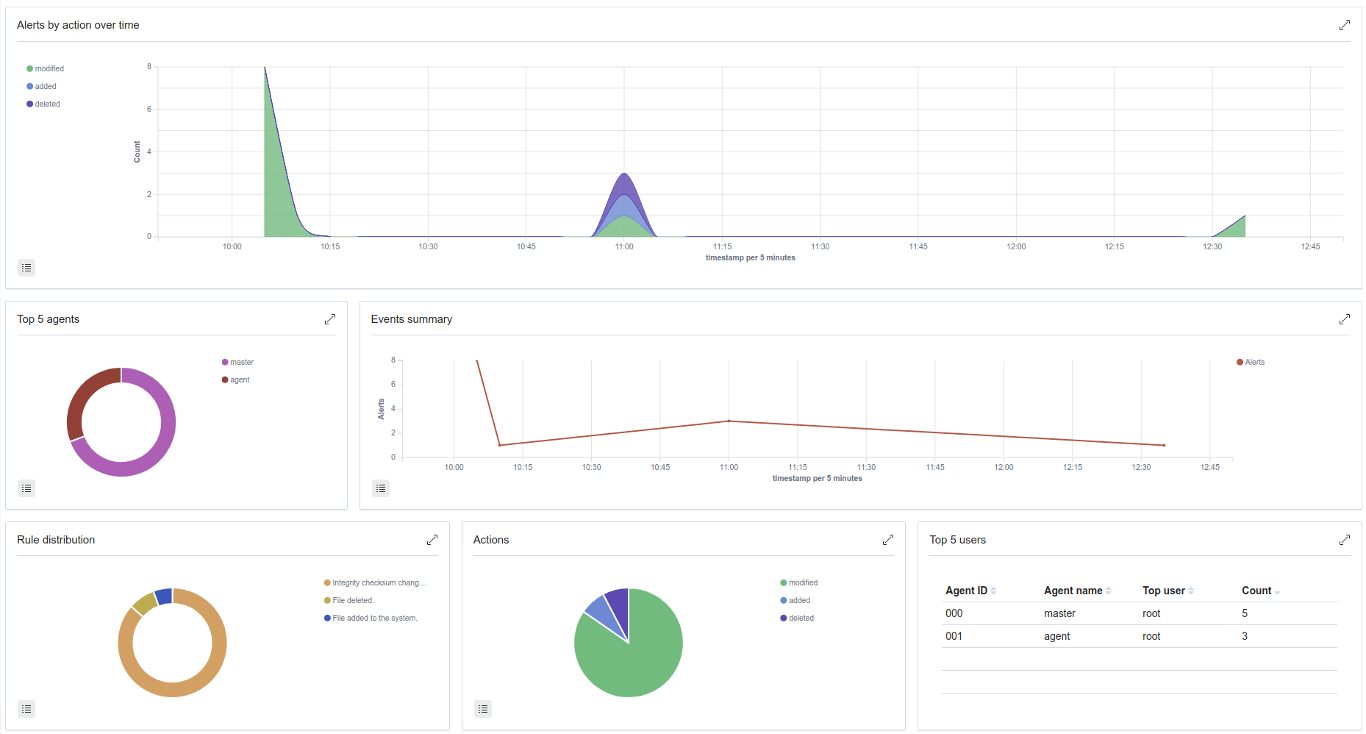

A good summary of file changes can be found in the FIM dashboard, which provides drill-down capabilities to view all of the details of the alerts triggered.

More information about how Wazuh monitors file integrity can be found here.

Rootkit detection

The Wazuh agent periodically scans the monitored system to detect rootkits at both the kernel and the user level. This type of malware usually replaces or changes existing operating system components in order to alter the behavior of the system. Rootkits can hide other processes, files or network connections, as well as themselves.

Wazuh uses different detection mechanisms to look for system anomalies or well-known intrusions. This is done periodically by the Rootcheck component:

| Action | Detection mechanism | Binary | System call |
|---|---|---|---|
| Detection of hidden processes | Comparing output of system binaries and system calls | ps | setsid(), getpgid(), kill() |
| Detection of hidden files | Comparing output of system binaries and system calls | ls | stat(), opendir(), readdir() |
| | Scanning /dev | ls | opendir() |
| Detection of hidden ports | Comparing output of system binaries and system calls | netstat | bind() |
| Detection of known rootkits | Using a malicious file database | | stat(), fopen(), opendir() |
| | Inspecting file contents using signatures | | fopen() |
| | Detecting file permission and ownership anomalies | | stat() |
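The "comparing output of system binaries and system calls" approach can be illustrated with a small, Linux-only Python sketch. It probes PIDs with kill(pid, 0), which tests for existence without delivering a signal, and compares the responders against a readdir() listing of /proc; a PID that answers the system call but is missing from the listing is suspicious. This is not Rootcheck's code: a real scanner must also discard thread IDs (which answer kill() but are not listed in /proc) and processes that start or exit between the two views. The function names are hypothetical.

```python
import os
from typing import Iterable, List, Set


def pid_responds(pid: int) -> bool:
    """Probe a PID with kill(pid, 0): no signal is sent, only existence is checked."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def pids_listed_in_proc(proc: str = "/proc") -> Set[int]:
    """PIDs visible through a directory listing of /proc (opendir/readdir)."""
    return {int(name) for name in os.listdir(proc) if name.isdigit()}


def hidden_pids(probed: Iterable[int], listed: Set[int]) -> List[int]:
    """PIDs that answer a system call but are missing from the /proc listing."""
    return sorted(pid for pid in probed if pid not in listed)


def scan(max_pid: int = 32768) -> List[int]:
    """Cross-view check over a PID range (expect false positives from threads)."""
    listed = pids_listed_in_proc()
    probed = [pid for pid in range(1, max_pid + 1) if pid_responds(pid)]
    return hidden_pids(probed, listed)
```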

Below is an example of an alert generated when a hidden process is found. In this case, the affected system is running a Linux kernel-level rootkit (named Diamorphine):

```json
{
  "agent": {
    "id": "1030",
    "ip": "10.0.0.59",
    "name": "diamorphine-POC"
  },
  "decoder": {
    "name": "rootcheck"
  },
  "full_log": "Process '562' hidden from /proc. Possible kernel level rootkit.",
  "location": "rootcheck",
  "manager": {
    "name": "vpc-ossec-manager"
  },
  "rule": {
    "description": "Host-based anomaly detection event (rootcheck).",
    "firedtimes": 4,
    "groups": ["ossec", "rootcheck"],
    "id": "510",
    "level": 7
  },
  "timestamp": "2017-03-05T15:13:04-0800",
  "title": "Process '562' hidden from /proc."
}
```

More information about how Wazuh detects rootkits can be found here.

Active Response

The Wazuh Active Response (AR) capability allows scripted actions to be taken in response to specific criteria of Wazuh rules being matched. By default, AR is enabled in all agents and all standard AR commands are defined in ossec.conf on the Wazuh manager, but no criteria for calling the AR commands are included. No AR commands will actually be triggered until further configuration is performed on the Wazuh manager.

For automated blocking, popular choices are the iptables firewall on Linux and null routing (blackholing) on Windows, defined respectively by the following commands:

```xml
<command>
  <name>firewall-drop</name>
  <executable>firewall-drop.sh</executable>
  <expect>srcip</expect>
  <timeout_allowed>yes</timeout_allowed>
</command>

<command>
  <name>win_route-null</name>
  <executable>route-null.cmd</executable>
  <expect>srcip</expect>
  <timeout_allowed>yes</timeout_allowed>
</command>
```

Each command has a descriptive <name> by which it is referred to in the <active-response> sections. The actual script to be called is defined by <executable>. The <expect> value specifies which log field (if any) is passed along to the script (such as srcip or username). Lastly, if <timeout_allowed> is set to yes, the command is considered stateful and can be reversed after an amount of time specified in a specific <active-response> section (see timeout). For more details about configuring active response, see the Wazuh user manual. Preconfigured active response scripts can be found here.
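The stateful behavior enabled by <timeout_allowed> can be modeled with a small Python sketch: a block is added when a rule fires and reversed once the configured timeout elapses. The class FirewallDropSketch is hypothetical and is not how firewall-drop.sh is implemented; it only builds iptables argument lists (-I to insert and -D to delete a DROP rule for the source IP) and never executes them. Ignoring repeated triggers for an IP that is already blocked is a simplification chosen for the sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FirewallDropSketch:
    """Hypothetical model of a stateful block that is reversed after a timeout."""
    timeout: int  # seconds, as configured in the <active-response> section
    blocked: Dict[str, float] = field(default_factory=dict)  # srcip -> expiry time

    def add(self, srcip: str, now: float) -> List[List[str]]:
        """Return the command that blocks srcip (nothing if it is already blocked)."""
        if srcip in self.blocked:
            return []  # keep the original expiry for repeated triggers
        self.blocked[srcip] = now + self.timeout
        return [["iptables", "-I", "INPUT", "-s", srcip, "-j", "DROP"]]

    def expire(self, now: float) -> List[List[str]]:
        """Return the commands that reverse every block whose timeout has elapsed."""
        due = [ip for ip, until in self.blocked.items() if until <= now]
        for ip in due:
            del self.blocked[ip]
        return [["iptables", "-D", "INPUT", "-s", ip, "-j", "DROP"] for ip in due]
```

A caller would invoke add() when the rule fires and expire() periodically, passing each returned argument list to a process runner.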

Security Configuration Assessment

The Security Configuration Assessment (SCA) module performs scans in order to discover exposures or misconfigurations in monitored hosts. These scans assess the configuration of the hosts by means of policy files, which contain rules to be tested against the actual configuration of the host. For example, SCA can assess whether it is necessary to change password-related configuration, remove unnecessary software, disable unnecessary services, or audit the TCP/IP stack configuration.

Policies for the SCA module are written in YAML. This format was chosen with human readability in mind, which allows users to quickly understand and write their own policies or extend the existing ones to fit their needs. Furthermore, Wazuh is distributed with a set of policies, most of them based on the CIS benchmarks, a well-established standard for host hardening.

Here is an example from policy cis_debian9_L2.yml:

```yaml
- id: 3511
  title: "Ensure auditd service is enabled"
  description: "Turn on the auditd daemon to record system events."
  rationale: "The capturing of system events provides system administrators [...]"
  remediation: "Run the following command to enable auditd: # systemctl enable auditd"
  compliance:
    - cis: ["4.1.2"]
    - cis_csc: ["6.2", "6.3"]
  condition: all
  rules:
    - 'c:systemctl is-enabled auditd -> r:^enabled'
```

After evaluating the aforementioned check, the following event is generated:

```json
{
  "type": "check",
  "id": 355612303,
  "policy": "CIS benchmark for Debian/Linux 9 L2",
  "policy_id": "cis_debian9_L2",
  "check": {
    "id": 3511,
    "title": "Ensure auditd service is enabled",
    "description": "Turn on the auditd daemon to record system events.",
    "rationale": "The capturing of system events provides system administrators [...]",
    "remediation": "Run the following command to enable auditd: # systemctl enable auditd",
    "compliance": {
      "cis": "4.1.2",
      "cis_csc": "6.2,6.3"
    },
    "rules": ["c:systemctl is-enabled auditd -> r:^enabled"],
    "command": "systemctl is-enabled auditd",
    "result": "passed"
  }
}
```

The result is passed because the rule found "enabled" at the beginning of a line in the output of the command systemctl is-enabled auditd. More information about security configuration assessment can be found here.
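A check like this one can be approximated in Python as shown below. The sketch supports only the single form 'c:<command> -> r:<regex>' and the condition values all, any and none, and it uses Python's re module where Wazuh uses its own regex syntax, so it is an illustration under those assumptions rather than the SCA engine. check_command_rule and evaluate_check are hypothetical names; the command runner is injectable so the logic can be tested without calling systemctl.

```python
import re
import shlex
import subprocess
from typing import Callable, List


def run_command(cmd: str) -> str:
    """Run a command (no shell features) and return its stdout; failures give ''."""
    try:
        proc = subprocess.run(shlex.split(cmd), capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return ""
    return proc.stdout


def check_command_rule(rule: str, run: Callable[[str], str] = run_command) -> bool:
    """Evaluate 'c:<command> -> r:<regex>': pass if the regex matches any output line."""
    if not rule.startswith("c:") or " -> r:" not in rule:
        raise ValueError(f"unsupported rule: {rule!r}")
    command, pattern = rule[2:].split(" -> r:", 1)
    output = run(command)
    # Matching line by line lets "^" anchor at the start of each line.
    return any(re.search(pattern, line) for line in output.splitlines())


def evaluate_check(rules: List[str], condition: str = "all",
                   run: Callable[[str], str] = run_command) -> str:
    """Combine rule results according to the check's condition."""
    results = [check_command_rule(r, run) for r in rules]
    if condition == "all":
        ok = all(results)
    elif condition == "any":
        ok = any(results)
    elif condition == "none":
        ok = not any(results)
    else:
        raise ValueError(f"unknown condition: {condition!r}")
    return "passed" if ok else "failed"
```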

System inventory

The main purpose of the system inventory module, Syscollector, is to gather the most relevant information from the monitored system.

Once the agent starts, Syscollector periodically scans the defined targets (hardware, OS, packages, etc.), forwarding the newly collected data to the manager, which updates the corresponding tables of the database.

The agent inventory serves several purposes. The complete inventory of each agent can be viewed in the Inventory tab of the Wazuh app, which queries the API to retrieve the data from the database. The Dev tools tab is also available; with this feature, the API can be queried directly about the different scans, filtering by any desired field.

In addition, the packages and hotfixes inventory is used as a feed for vulnerability detection.

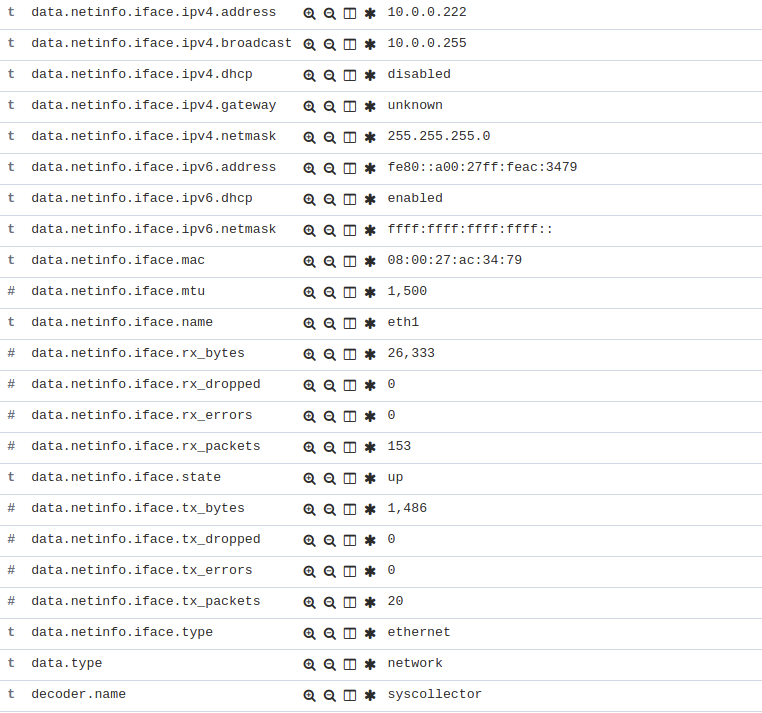

Since Wazuh 3.9, Syscollector module information can be used to trigger alerts and to show that information in the alert description.

To do so, set the <decoded_as> field of the rule declaration to syscollector.

As an example, this rule is triggered when the eth0 interface of an agent is enabled, and it shows the IPv4 address of that interface.

```xml
<rule id="100001" level="5">
  <if_sid>221</if_sid>
  <decoded_as>syscollector</decoded_as>
  <field name="netinfo.iface.name">eth0</field>
  <description>eth0 interface enabled. IP: $(netinfo.iface.ipv4.address)</description>
</rule>
```
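The two mechanisms this rule relies on, matching a decoded field and expanding $(field) placeholders in the description, can be sketched in Python. The nested event layout below is an assumption based on the dotted field names used in the rule, the parent rule 221 is not modeled, and get_field, render and rule_100001 are hypothetical helpers rather than Wazuh internals.

```python
import re
from typing import Any, Optional

PLACEHOLDER = re.compile(r"\$\(([^)]+)\)")


def get_field(event: dict, dotted: str) -> Optional[Any]:
    """Resolve a dotted name such as 'netinfo.iface.name' in a nested dict."""
    node: Any = event
    for part in dotted.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def render(template: str, event: dict) -> str:
    """Replace each $(field) placeholder with the value from the decoded event."""
    def substitute(m: "re.Match[str]") -> str:
        value = get_field(event, m.group(1))
        return "" if value is None else str(value)
    return PLACEHOLDER.sub(substitute, template)


def rule_100001(event: dict) -> Optional[str]:
    """Sketch of rule 100001: fire when netinfo.iface.name matches 'eth0'."""
    name = get_field(event, "netinfo.iface.name")
    if name is None or not re.search("eth0", str(name)):
        return None
    return render("eth0 interface enabled. IP: $(netinfo.iface.ipv4.address)", event)
```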

When the alerts are triggered they will be displayed in Kibana this way:

More information about how system inventory works and its capabilities can be found here.

Vulnerability detection

Wazuh is able to detect vulnerabilities in the applications installed on the agent's host system. The agents periodically send a list of the installed applications to the manager, where it is stored in a local SQLite database (one per agent). In addition, the manager builds a global vulnerability database from public CVE repositories and cross-correlates it with each agent's application inventory.

The global vulnerabilities database is created automatically, currently pulling data from the following repositories:

https://canonical.com: Used to pull CVEs for Ubuntu Linux distributions.

https://access.redhat.com: Used to pull CVEs for Red Hat and CentOS Linux distributions.

https://www.debian.org: Used to pull CVEs for Debian Linux distributions.

https://nvd.nist.gov/: Used to pull CVEs from the National Vulnerability Database. Currently, it is only used for Windows agents.

The following example shows how to configure the necessary components to run the vulnerability detection process.

To enable the agent module that collects the packages installed on the monitored system, add the following block of settings to the agent's configuration file:

```xml
<wodle name="syscollector">
  <disabled>no</disabled>
  <interval>1h</interval>
  <packages>yes</packages>
</wodle>
```

Enable the manager module used to detect vulnerabilities by adding a block like the following:

```xml
<vulnerability-detector>
  <enabled>yes</enabled>
  <interval>5m</interval>
  <run_on_start>yes</run_on_start>
  <provider name="canonical">
    <enabled>yes</enabled>
    <os>bionic</os>
    <update_interval>1h</update_interval>
  </provider>
</vulnerability-detector>
```

After restarting the Wazuh manager process, the output should look like this:

```
** Alert 1571137967.2083: - vulnerability-detector,gdpr_IV_35.7.d,
2019 Oct 15 11:12:47 c31dd66f7e82->vulnerability-detector
Rule: 23503 (level 5) -> 'CVE-2018-5710 on Ubuntu 18.04 LTS (bionic) - low.'
{"vulnerability":{"cve":"CVE-2018-5710","title":"CVE-2018-5710 on Ubuntu 18.04 LTS (bionic) - low.","severity":"Low","published":"2018-01-16T09:29:00Z","state":"Fixed","package":{"name":"libgssapi-krb5-2","version":"1.16-2ubuntu0.1","architecture":"amd64"},"condition":"Package less than 1.16.1-1","reference":"https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2018-5710"}}
vulnerability.cve: CVE-2018-5710
vulnerability.title: CVE-2018-5710 on Ubuntu 18.04 LTS (bionic) - low.
vulnerability.severity: Low
vulnerability.published: 2018-01-16T09:29:00Z
vulnerability.state: Fixed
vulnerability.package.name: libgssapi-krb5-2
vulnerability.package.version: 1.16-2ubuntu0.1
vulnerability.package.architecture: amd64
vulnerability.package.condition: Package less than 1.16.1-1
vulnerability.reference: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2018-5710
```
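The condition in this alert, "Package less than 1.16.1-1", requires comparing Debian package versions, which is not a plain string comparison: 1.16 sorts before 1.16.1, and ~ sorts before everything, even the end of the string. The Python sketch below follows the dpkg version ordering rules (epoch, upstream version, revision). It is a swapped-in illustration of that technique, not Wazuh's own implementation, and RPM-based systems use different rules.

```python
import string
from typing import Tuple


def _order(c: str) -> int:
    """Sort weight of one character in a non-digit run (dpkg rules)."""
    if c == "" or c in string.digits:
        return 0
    if c in string.ascii_letters:
        return ord(c)
    if c == "~":
        return -1  # "~" sorts before anything, even the end of the string
    return ord(c) + 256  # other symbols sort after letters


def _verrevcmp(a: str, b: str) -> int:
    """Compare alternating non-digit and digit runs of two version fragments."""
    i = j = 0
    while i < len(a) or j < len(b):
        first_diff = 0
        # Non-digit runs are compared character by character.
        while (i < len(a) and a[i] not in string.digits) or (j < len(b) and b[j] not in string.digits):
            ac = _order(a[i] if i < len(a) else "")
            bc = _order(b[j] if j < len(b) else "")
            if ac != bc:
                return ac - bc
            i += 1
            j += 1
        # Digit runs are compared numerically, ignoring leading zeros.
        while i < len(a) and a[i] == "0":
            i += 1
        while j < len(b) and b[j] == "0":
            j += 1
        while i < len(a) and a[i] in string.digits and j < len(b) and b[j] in string.digits:
            if not first_diff:
                first_diff = ord(a[i]) - ord(b[j])
            i += 1
            j += 1
        if i < len(a) and a[i] in string.digits:
            return 1  # a has the longer number
        if j < len(b) and b[j] in string.digits:
            return -1
        if first_diff:
            return first_diff
    return 0


def parse_version(v: str) -> Tuple[int, str, str]:
    """Split '[epoch:]upstream[-revision]' into its three components."""
    epoch, sep, rest = v.partition(":")
    if not sep:
        epoch, rest = "0", v
    upstream, sep, revision = rest.rpartition("-")
    if not sep:
        upstream, revision = rest, ""
    return int(epoch), upstream, revision


def compare_versions(a: str, b: str) -> int:
    """Negative if a < b, zero if equal, positive if a > b."""
    ea, ua, ra = parse_version(a)
    eb, ub, rb = parse_version(b)
    if ea != eb:
        return ea - eb
    return _verrevcmp(ua, ub) or _verrevcmp(ra, rb)


def is_vulnerable(installed: str, fixed_in: str) -> bool:
    """Model of a 'Package less than <fixed_in>' condition."""
    return compare_versions(installed, fixed_in) < 0
```

With the values from the alert, is_vulnerable("1.16-2ubuntu0.1", "1.16.1-1") returns True, which is consistent with the reported CVE.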

To learn more about how vulnerability detection works, visit the Vulnerability detection section.

Cloud security monitoring

Wazuh helps monitor Amazon Web Services and Microsoft Azure infrastructures.

Amazon Web Services

Wazuh helps to increase the security of an AWS infrastructure in two different, complementary ways:

Installing the Wazuh agent on the instances to monitor the activity inside them. It collects different types of system and application data and forwards it to the Wazuh manager. Different agent tasks or processes are used to monitor the system in different ways (e.g., monitoring file integrity, reading system log messages and scanning system configurations).

Monitoring AWS services to collect and analyze log data about the infrastructure. Thanks to the module for AWS, Wazuh can trigger alerts based on the events obtained from these services, which provide rich and complete information about the infrastructure, such as instance configuration, unauthorized behavior, data stored on S3, and more.

The following table contains the most relevant information about configuring each service in ossec.conf:

| Service | Configuration tag | Type | Path to logs |
|---|---|---|---|
| CloudTrail | bucket | cloudtrail | <bucket_name>/<prefix>/AWSLogs/<account_id>/CloudTrail/<region>/<year>/<month>/<day> |
| VPC Flow Logs | bucket | vpcflow | <bucket_name>/<prefix>/AWSLogs/<account_id>/vpcflowlogs/<region>/<year>/<month>/<day> |
| Config | bucket | config | <bucket_name>/<prefix>/AWSLogs/<account_id>/Config/<region>/<year>/<month>/<day> |
| | bucket | custom | <bucket_name>/<prefix>/<year>/<month>/<day> |
| | bucket | custom | <bucket_name>/<prefix>/<year>/<month>/<day> |
| | bucket | custom | <bucket_name>/<prefix>/<year>/<month>/<day> |
| GuardDuty | bucket | guardduty | <bucket_name>/<prefix>/<year>/<month>/<day>/<hh> |
| Inspector | service | inspector | |
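The log paths in this table follow a fixed layout, so the S3 key prefix for a given day can be derived mechanically. The Python sketch below assumes zero-padded month, day and hour values (as AWS uses for CloudTrail keys); log_path, AWS_LAYOUTS and the bucket and account values used in the usage line are hypothetical. Inspector is omitted because it is read through the service rather than from a bucket.

```python
from datetime import date
from typing import Optional

# Layouts that follow "<bucket_name>/<prefix>/", copied from the table above.
AWS_LAYOUTS = {
    "cloudtrail": "AWSLogs/{account}/CloudTrail/{region}/{y}/{m}/{d}",
    "vpcflow": "AWSLogs/{account}/vpcflowlogs/{region}/{y}/{m}/{d}",
    "config": "AWSLogs/{account}/Config/{region}/{y}/{m}/{d}",
    "custom": "{y}/{m}/{d}",
    "guardduty": "{y}/{m}/{d}/{hh}",
}


def log_path(bucket_type: str, bucket: str, day: date, prefix: str = "",
             account: str = "", region: str = "", hour: Optional[int] = None) -> str:
    """Build the S3 key prefix where the logs of one day (or hour) are expected."""
    if bucket_type not in AWS_LAYOUTS:
        raise ValueError(f"no bucket layout for type {bucket_type!r}")
    layout = AWS_LAYOUTS[bucket_type]
    if "{account}" in layout and not (account and region):
        raise ValueError("account and region are required for this bucket type")
    if "{hh}" in layout and hour is None:
        raise ValueError("hour is required for this bucket type")
    tail = layout.format(
        account=account, region=region,
        y=f"{day.year:04d}", m=f"{day.month:02d}", d=f"{day.day:02d}",
        hh="" if hour is None else f"{hour:02d}",  # zero padding is an assumption
    )
    parts = [bucket, prefix.strip("/"), tail]
    return "/".join(p for p in parts if p)  # an empty prefix is skipped
```

For example, log_path("cloudtrail", "my-bucket", date(2020, 3, 6), account="123456789012", region="us-east-1") gives "my-bucket/AWSLogs/123456789012/CloudTrail/us-east-1/2020/03/06".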

More information about using Wazuh to monitor AWS can be found here.

Microsoft Azure

The Activity Log provides information on subscription-level events that have occurred in Azure, with the following relevant information:

Administrative Data: Covers the logging of all creation, update, deletion and action operations performed through the Resource Manager. All actions performed by a user or application using the Resource Manager are interpreted as an operation on a specific resource type. Operations such as write, delete, or action involve logging both the start and the success or failure of that operation in the Administrative category. The Administrative category also includes any changes to the role-based access control of a subscription.

Alert Data: Contains the log of all activations of Azure alerts. For example, an alert can be obtained when the CPU usage of one of the virtual machines of the infrastructure exceeds a certain threshold. Azure provides the option to create custom rules to receive notifications when an event matches the rule. When an alert is activated, it is logged in the Activity Log.

Security Data: Contains the log of alerts generated by Azure Security Center. For example, a log could be related to the execution of suspicious files.

Service Health Data: Covers the log of any service health incident that has occurred in Azure. There are six different types of health events: Action Required, Assisted Recovery, Incident, Maintenance, Information, or Security, logged when a subscription resource is affected by the event.

Autoscale Data: Contains the logging of any event related to the autoscale engine based on the autoscale settings in your subscription. Autoscale start events and successful or failed events are logged in this category.

Recommendation Data: Includes Azure Advisor recommendation events.

To monitor the activities of the infrastructure, you can use the Azure Log Analytics REST API or directly access the content of Azure Storage accounts. Both approaches involve steps in the Microsoft Azure portal and the use of the azure-logs module on the Wazuh manager.

Here is an example of rules for alert generation:

```xml
<rule id="87801" level="5">
  <decoded_as>json</decoded_as>
  <field name="azure_tag">azure-log-analytics</field>
  <description>Azure: Log analytics</description>
</rule>

<rule id="87810" level="3">
  <if_sid>87801</if_sid>
  <field name="Type">AzureActivity</field>
  <description>Azure: Log analytics activity</description>
</rule>

<rule id="87811" level="3">
  <if_sid>87810</if_sid>
  <field name="OperationName">\.+</field>
  <description>Azure: Log analytics: $(OperationName)</description>
</rule>
```
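These three rules form a chain through <if_sid>: 87810 is only evaluated after 87801 matches, and 87811 only after 87810, so the most specific matching rule determines the alert. The Python sketch below models that chain for already decoded JSON fields. It is a simplification: <decoded_as> is not modeled, ChainRule and classify are hypothetical names, and the pattern \.+ (which in Wazuh's regex syntax matches one or more characters of any kind) is written as its Python equivalent .+.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ChainRule:
    """Hypothetical model of a rule that matches decoded JSON fields."""
    rule_id: str
    level: int
    fields: Dict[str, str]        # field name -> Python regex
    description: str
    if_sid: Optional[str] = None  # parent rule id (None for a root rule)


RULES = [
    ChainRule("87801", 5, {"azure_tag": "azure-log-analytics"}, "Azure: Log analytics"),
    ChainRule("87810", 3, {"Type": "AzureActivity"}, "Azure: Log analytics activity", "87801"),
    # Wazuh's "\.+" (any character, one or more times) written as Python's ".+".
    ChainRule("87811", 3, {"OperationName": ".+"}, "Azure: Log analytics: $(OperationName)", "87810"),
]


def _matches(rule: ChainRule, data: Dict[str, str]) -> bool:
    return all(name in data and re.search(rx, str(data[name])) is not None
               for name, rx in rule.fields.items())


def classify(data: Dict[str, str], rules: List[ChainRule] = RULES) -> Optional[dict]:
    """Follow the if_sid chain from the root rules and report the deepest match."""
    children: Dict[Optional[str], List[ChainRule]] = {}
    for rule in rules:
        children.setdefault(rule.if_sid, []).append(rule)
    best: Optional[ChainRule] = None
    candidates = children.get(None, [])
    while True:
        hit = next((r for r in candidates if _matches(r, data)), None)
        if hit is None:
            break
        best, candidates = hit, children.get(hit.rule_id, [])
    if best is None:
        return None
    # Expand $(field) placeholders with values from the event.
    description = re.sub(r"\$\((\w+)\)", lambda m: str(data.get(m.group(1), "")),
                         best.description)
    return {"id": best.rule_id, "level": best.level, "description": description}
```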

And here is the output:

```json
{
  "timestamp": "2020-03-06T09:06:51.432+0000",
  "rule": {
    "level": 3,
    "description": "Azure: Log analytics: Microsoft.Compute/virtualMachines/start/action",
    "id": "62723",
    "firedtimes": 1,
    "mail": false,
    "groups": ["azure"]
  },
  "agent": {
    "id": "000",
    "name": "wazuh-manager-master-0"
  },
  "manager": {
    "name": "wazuh-manager-master-0"
  },
  "id": "1582685611.529",
  "cluster": {
    "name": "wazuh",
    "node": "wazuh-manager-master-0"
  },
  "decoder": {
    "name": "json"
  },
  "data": {
    "Category": "Administrative",
    "ResourceProvider": "Microsoft.Compute",
    "TenantId": "d4cd75e6-7i2e-554d-b604-3811e9914fea",
    "ActivityStatus": "Started",
    "Type": "AzureActivity",
    "Authorization": "{\r\n \"action\": \"Microsoft.Compute/virtualMachines/start/action\",\r\n \"scope\": \"/subscriptions/v1153d2d-ugl4-4221-bc88-40365el115gg/resourceGroups/WazuhGroup/providers/Microsoft.Compute/virtualMachines/Logstash\"\r\n}",
    "OperationId": "d4elf2e7-65d8-2824-gf44-37742d81c00f",
    "azure_tag": "azure-log-analytics",
    "ResourceId": "/subscriptions/v1153d2d-ugl4-4221-bc88-40365el115gg/resourceGroups/WazuhGroup/providers/Microsoft.Compute/virtualMachines/Logstash",
    "OperationName": "Microsoft.Compute/virtualMachines/start/action",
    "CorrelationId": "d4elf2e7-65d8-2824-gf44-37742d81c00f",
    "HTTPRequest": "{\r\n \"clientRequestId\": \"dc562c26-c1r2-5fac-94c2-824h208n2024\",\r\n \"clientIpAddress\": \"83.49.98.225\",\r\n \"method\": \"POST\"\r\n}",
    "log_analytics_tag": "azure-activity",
    "Resource": "Logstash",
    "Level": "Informational",
    "Caller": "user.email@email.com",
    "TimeGenerated": "2018-05-25T15:43:16.52Z",
    "ResourceGroup": "WazuhGroup",
    "SubscriptionId": "v1153d2d-ugl4-4221-bc88-40365el115gg",
    "EventSubmissionTimestamp": "2018-05-25T15:43:36.109Z",
    "CallerIpAddress": "83.49.98.225",
    "EventDataId": "69db115c-45ds-664b-4275-a684a72b8df2",
    "SourceSystem": "Azure"
  },
  "location": "/azure.json"
}
```

More information about how to use Wazuh to monitor Microsoft Azure can be found here.

Containers security monitoring

Docker

All the features available in an agent can be useful to monitor a Docker server. The Docker wodle collects events on Docker containers, such as starting, stopping or pausing.

In order to use the Docker listener module, it is only necessary to enable the wodle in the /var/ossec/etc/ossec.conf file of the server running Docker (alternatively, this can be done through here). The module starts a new thread to listen to Docker events.

```xml
<wodle name="docker-listener">
  <disabled>no</disabled>
</wodle>
```

For example, the command docker start apache, which starts a container called apache, generates the following alert:

```json
{
  "timestamp": "2018-10-05T17:15:33.892+0200",
  "rule": {
    "level": 3,
    "description": "Container apache started",
    "id": "87903",
    "mail": false,
    "groups": ["docker"]
  },
  "agent": {
    "id": "002",
    "name": "agent001",
    "ip": "192.168.122.19"
  },
  "manager": {
    "name": "localhost.localdomain"
  },
  "id": "1538752533.76076",
  "cluster": {
    "name": "wazuh",
    "node": "master"
  },
  "full_log": "{\"integration\": \"docker\", \"docker\": {\"status\": \"start\", \"id\": \"018205fa7e170e32578b8487e3b7040aad00b8accedb983bc2ad029238ca3620\", \"from\": \"httpd\", \"Type\": \"container\", \"Action\": \"start\", \"Actor\": {\"ID\": \"018205fa7e170e32578b8487e3b7040aad00b8accedb983bc2ad029238ca3620\", \"Attributes\": {\"image\": \"httpd\", \"name\": \"apache\"}}, \"time\": 1538752533, \"timeNano\": 1538752533877226210}}",
  "decoder": {
    "name": "json"
  },
  "data": {
    "integration": "docker",
    "docker": {
      "status": "start",
      "id": "018205fa7e170e32578b8487e3b7040aad00b8accedb983bc2ad029238ca3620",
      "from": "httpd",
      "Type": "container",
      "Action": "start",
      "Actor": {
        "ID": "018205fa7e170e32578b8487e3b7040aad00b8accedb983bc2ad029238ca3620",
        "Attributes": {
          "image": "httpd",
          "name": "apache"
        }
      },
      "time": "1538752533",
      "timeNano": "1538752533877226240.000000"
    }
  },
  "location": "Wazuh-Docker"
}
```
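The full_log field shows that each raw Docker event is wrapped in an object with "integration": "docker" before analysis. The Python sketch below reproduces that wrapping and derives a description like the one in the alert; it is not the docker-listener code, and ACTION_WORDS, to_wazuh_payload and describe are hypothetical. In a live setup, events with this shape can be streamed with the Docker SDK for Python through docker.from_env().events(decode=True).

```python
import json
from typing import Any, Dict

# Hypothetical wording for a few Docker actions.
ACTION_WORDS = {"start": "started", "stop": "stopped", "pause": "paused", "unpause": "unpaused"}


def to_wazuh_payload(event: Dict[str, Any]) -> str:
    """Wrap a raw Docker event the way the full_log field above shows it."""
    return json.dumps({"integration": "docker", "docker": event})


def describe(event: Dict[str, Any]) -> str:
    """Build a short description such as "Container apache started"."""
    name = event.get("Actor", {}).get("Attributes", {}).get("name", "unknown")
    action = event.get("Action", "")
    return f"Container {name} {ACTION_WORDS.get(action, action or 'changed')}"
```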

More information about how to use Wazuh for monitoring Docker can be found here.