Amazon Security Lake integration

Note

This document guides you through setting up Wazuh as a data source for Amazon Security Lake. To configure Wazuh as a subscriber to Amazon Security Lake, refer to Wazuh as a subscriber.

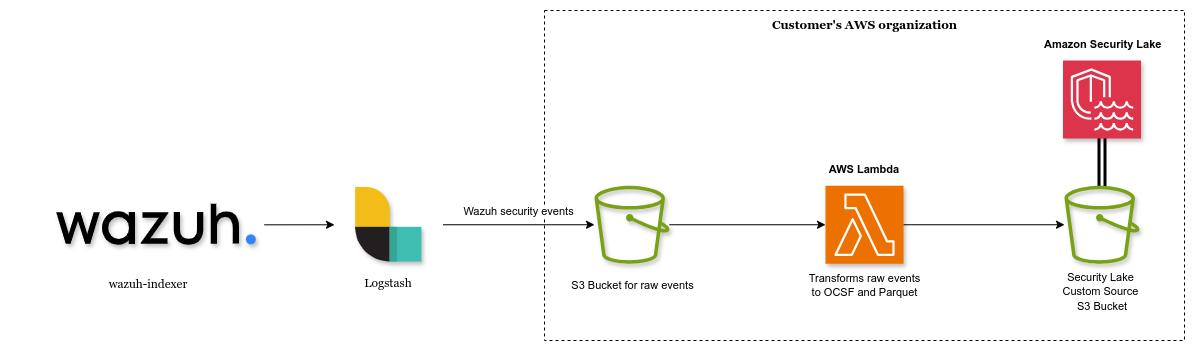

Wazuh Security Events can be converted to OCSF events and Parquet format, required by Amazon Security Lake, by using an AWS Lambda Python function, a Logstash instance and an AWS S3 bucket.

A properly configured Logstash instance can send the Wazuh Security events to an AWS S3 bucket, automatically invoking the AWS Lambda function that will transform and send the events to the Amazon Security lake dedicated S3 bucket.

The diagram below illustrates the process of converting Wazuh Security Events to OCSF events and to Parquet format for Amazon Security Lake.

Prerequisites

Amazon Security Lake is enabled.

At least one up and running Wazuh Indexer instance with populated wazuh-alerts-4.x-* indices.

A Logstash instance.

An S3 bucket to store raw events.

An AWS Lambda function, using the Python 3.12 runtime.

(Optional) An S3 bucket to store OCSF events, mapped from raw events.

AWS configuration

Enabling Amazon Security Lake

If you haven't already, ensure that you have enabled Amazon Security Lake by following the instructions at Getting started - Amazon Security Lake.

For multiple AWS accounts, we strongly encourage you to use AWS Organizations and set up Amazon Security Lake at the Organization level.

Creating an S3 bucket to store events

Follow the official documentation to create an S3 bucket within your organization. Use a descriptive name, for example: wazuh-aws-security-lake-raw.

Creating a Custom Source in Amazon Security Lake

Configure a custom source for Amazon Security Lake via the AWS console. Follow the official documentation to register Wazuh as a custom source.

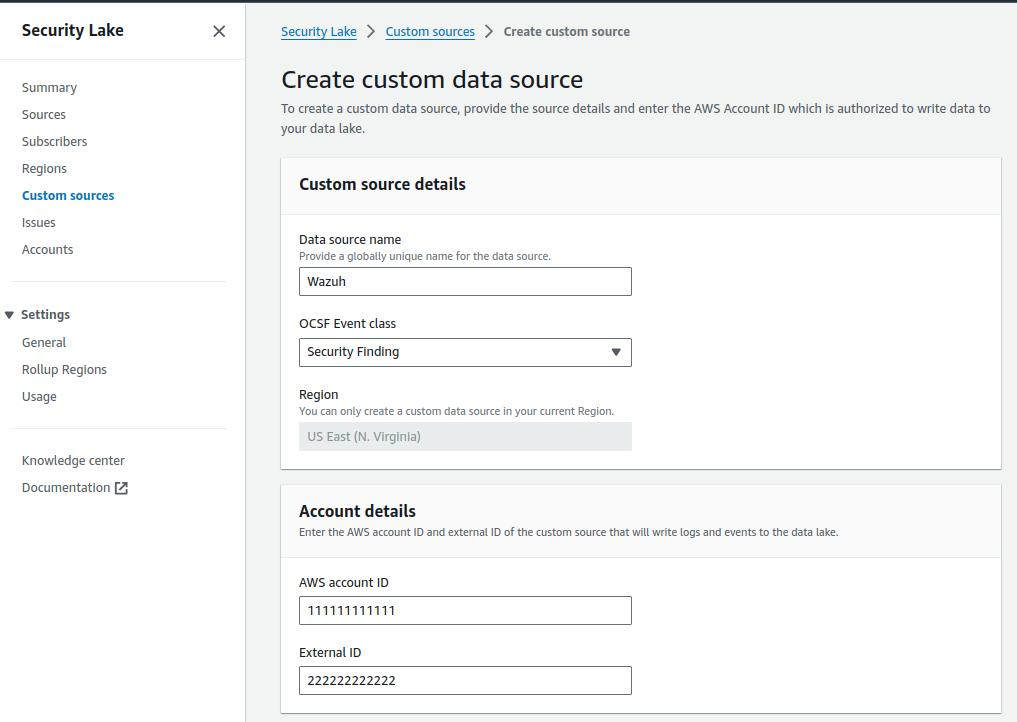

To create the custom source:

Log into your AWS console and navigate to Security Lake.

Navigate to Custom Sources, and click Create custom source.

Enter a descriptive name for your custom source, for example, wazuh.

Choose Security Finding as the OCSF Event class.

For AWS account with permission to write data, enter the AWS account ID and External ID of the custom source that will write logs and events to the data lake.

For Service Access, create and use a new service role or use an existing service role that gives Security Lake permission to invoke AWS Glue.

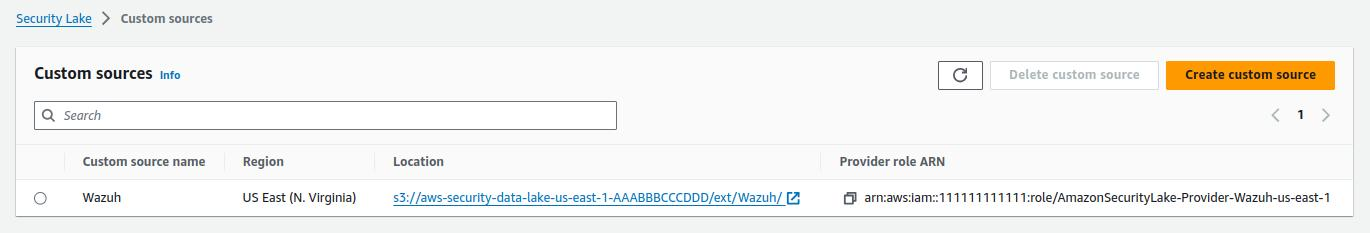

Click on Create. Upon creation, Amazon Security Lake automatically creates an AWS Service Role with permissions to push files into the Security Lake bucket, under the proper prefix named after the custom source name. An AWS Glue Crawler is also created to populate the AWS Glue Data Catalog automatically.

Finally, collect the S3 bucket details, as these will be needed in the next step. Make sure you have the following information:

The Amazon Security Lake S3 region.

The S3 bucket name (for example, aws-security-data-lake-us-east-1-AAABBBCCCDDD).

Creating an AWS Lambda function

Follow the official documentation to create an AWS Lambda function:

Select Python 3.12 as the runtime.

Configure the Lambda function to use 512 MB of memory and a 30-second timeout.

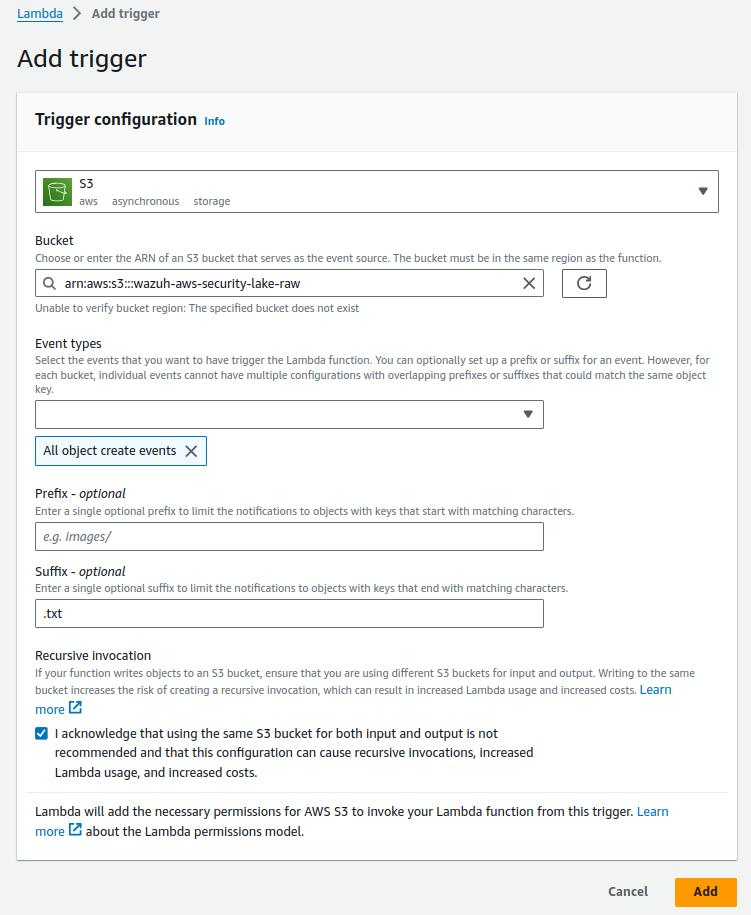

Configure a trigger so that every object with the .txt extension uploaded to the S3 bucket created previously invokes the Lambda function.

Create a .zip deployment package and upload it to the S3 bucket created previously, following the AWS Lambda instructions for .zip deployment packages. The code is hosted in the Wazuh Indexer repository. Use its Makefile to generate the wazuh_to_amazon_security_lake.zip package:

$ git clone https://github.com/wazuh/wazuh-indexer.git && cd wazuh-indexer
$ git checkout v4.14.3
$ cd integrations/amazon-security-lake
$ make

Configure the Lambda function with the following environment variables:

| Environment variable | Required | Value |
|---|---|---|
| AWS_BUCKET | True | The name of the Amazon S3 bucket in which Security Lake stores your custom source data |
| SOURCE_LOCATION | True | The data source name of the custom source |
| ACCOUNT_ID | True | The AWS account ID that you specified when creating your Amazon Security Lake custom source |
| REGION | True | The AWS Region to which the data is written |
| S3_BUCKET_OCSF | False | The S3 bucket to which the mapped events are written |
| OCSF_CLASS | False | The OCSF class to map the events into: SECURITY_FINDING (default) or DETECTION_FINDING |

Note

The DETECTION_FINDING class is not yet supported by Amazon Security Lake.
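The table implies a few rules that the Lambda function has to enforce. Four variables are mandatory, OCSF_CLASS falls back to SECURITY_FINDING, and only .txt objects should be processed. The sketch below illustrates those rules in plain Python. It is not the code from the Wazuh Indexer repository. LambdaConfig, load_config, and txt_objects are hypothetical names. The event layout it reads (Records[].s3.bucket.name and Records[].s3.object.key) is the standard S3 event notification format.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional
from urllib.parse import unquote_plus

# Hypothetical helper names; the variable names come from the table above.
REQUIRED_VARS = ("AWS_BUCKET", "SOURCE_LOCATION", "ACCOUNT_ID", "REGION")
SUPPORTED_CLASSES = ("SECURITY_FINDING", "DETECTION_FINDING")


@dataclass(frozen=True)
class LambdaConfig:
    aws_bucket: str
    source_location: str
    account_id: str
    region: str
    s3_bucket_ocsf: Optional[str]
    ocsf_class: str


def load_config(env: Mapping[str, str] = os.environ) -> LambdaConfig:
    """Read the settings, failing fast if a required variable is missing or empty."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ValueError("missing required environment variables: " + ", ".join(missing))
    ocsf_class = env.get("OCSF_CLASS") or "SECURITY_FINDING"  # documented default
    if ocsf_class not in SUPPORTED_CLASSES:
        raise ValueError(f"unsupported OCSF_CLASS: {ocsf_class}")
    return LambdaConfig(
        aws_bucket=env["AWS_BUCKET"],
        source_location=env["SOURCE_LOCATION"],
        account_id=env["ACCOUNT_ID"],
        region=env["REGION"],
        s3_bucket_ocsf=env.get("S3_BUCKET_OCSF") or None,  # optional variable
        ocsf_class=ocsf_class,
    )


def txt_objects(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for the .txt objects in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 event notifications deliver object keys URL-encoded ("+" for spaces).
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.endswith(".txt"):
            objects.append((bucket, key))
    return objects
```

Filtering on the suffix inside the function duplicates the S3 trigger filter. It is a cheap safeguard in case the trigger is later widened. Keys are URL-decoded before use because S3 event notifications deliver them URL-encoded.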

Validation

To validate that the Lambda function is properly configured and works as expected, create a test file with the following command.

$ touch "$(date +'%Y%m%d')_ls.s3.wazuh-test-events.$(date +'%Y-%m-%dT%H.%M').part00.txt"

Add the sample events below to the file, one event per line, and upload it to the S3 bucket created previously.

{"cluster":{"name":"wazuh-cluster","node":"wazuh-manager"},"timestamp":"2024-04-22T14:20:46.976+0000","rule":{"mail":false,"gdpr":["IV_30.1.g"],"groups":["audit","audit_command"],"level":3,"firedtimes":1,"id":"80791","description":"Audit: Command: /usr/sbin/crond"},"location":"","agent":{"id":"004","ip":"47.204.15.21","name":"Ubuntu"},"data":{"audit":{"type":"NORMAL","file":{"name":"/etc/sample/file"},"success":"yes","command":"cron","exe":"/usr/sbin/crond","cwd":"/home/wazuh"}},"predecoder":{},"manager":{"name":"wazuh-manager"},"id":"1580123327.49031","decoder":{},"@version":"1","@timestamp":"2024-04-22T14:20:46.976Z"}

{"cluster":{"name":"wazuh-cluster","node":"wazuh-manager"},"timestamp":"2024-04-22T14:22:03.034+0000","rule":{"mail":false,"gdpr":["IV_30.1.g"],"groups":["audit","audit_command"],"level":3,"firedtimes":1,"id":"80790","description":"Audit: Command: /usr/sbin/bash"},"location":"","agent":{"id":"007","ip":"24.273.97.14","name":"Debian"},"data":{"audit":{"type":"PATH","file":{"name":"/bin/bash"},"success":"yes","command":"bash","exe":"/usr/sbin/bash","cwd":"/home/wazuh"}},"predecoder":{},"manager":{"name":"wazuh-manager"},"id":"1580123327.49031","decoder":{},"@version":"1","@timestamp":"2024-04-22T14:22:03.034Z"}

{"cluster":{"name":"wazuh-cluster","node":"wazuh-manager"},"timestamp":"2024-04-22T14:22:08.087+0000","rule":{"id":"1740","mail":false,"description":"Sample alert 1","groups":["ciscat"],"level":9},"location":"","agent":{"id":"006","ip":"207.45.34.78","name":"Windows"},"data":{"cis":{"rule_title":"CIS-CAT 5","timestamp":"2024-04-22T14:22:08.087+0000","benchmark":"CIS Ubuntu Linux 16.04 LTS Benchmark","result":"notchecked","pass":52,"fail":0,"group":"Access, Authentication and Authorization","unknown":61,"score":79,"notchecked":1,"@timestamp":"2024-04-22T14:22:08.087+0000"}},"predecoder":{},"manager":{"name":"wazuh-manager"},"id":"1580123327.49031","decoder":{},"@version":"1","@timestamp":"2024-04-22T14:22:08.087Z"}
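If you prefer to script this step, the following sketch writes the sample events to a file named with the same pattern as the touch command above, one compact JSON object per line. The function names are hypothetical. The validation rule (every non-blank line must be a JSON object) is an assumption for illustration, not a requirement taken from the Lambda code.

```python
import json
from datetime import datetime
from pathlib import Path


def sample_object_name(now: datetime) -> str:
    # Same pattern as the touch command: <YYYYMMDD>_ls.s3.wazuh-test-events.<YYYY-MM-DDTHH.MM>.part00.txt
    return f"{now:%Y%m%d}_ls.s3.wazuh-test-events.{now:%Y-%m-%dT%H.%M}.part00.txt"


def write_test_file(lines: list[str], directory: Path, now: datetime) -> Path:
    """Write each sample event as one compact JSON object per line and return the file path."""
    events = []
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # skip blank separator lines
        event = json.loads(line)  # raises json.JSONDecodeError (a ValueError) on bad JSON
        if not isinstance(event, dict):
            raise ValueError(f"line {number} is not a JSON object")
        events.append(event)
    path = directory / sample_object_name(now)
    with path.open("w", encoding="utf-8") as handle:
        for event in events:
            handle.write(json.dumps(event, separators=(",", ":")) + "\n")
    return path
```

For example, write_test_file(lines, Path("."), datetime.now()) returns the path of the file to upload.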

A successful execution of the Lambda function will map these events into the OCSF Security Finding Class and write them to the Amazon Security Lake S3 bucket in Parquet format, properly partitioned based on the Custom Source name, Account ID, AWS Region and date, as described in the official documentation.
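The partitioning can be illustrated with a small helper. This sketch rests on one assumption: it uses the ext/<source>/region=<region>/accountId=<account>/eventDay=<YYYYMMDD>/ layout described in the AWS documentation for custom sources. Compare it with the objects actually written to your Security Lake bucket. Both function names are hypothetical, and the account ID below is a placeholder. event_day_from_key assumes that the object key starts with a YYYYMMDD date, as the test file name does.

```python
from datetime import date, datetime


def event_day_from_key(key: str) -> date:
    """Parse the YYYYMMDD date at the start of a raw object key."""
    return datetime.strptime(key[:8], "%Y%m%d").date()


def partition_prefix(source_name: str, region: str, account_id: str, event_day: date) -> str:
    """Build the assumed Security Lake custom-source prefix for one day of events."""
    return (
        f"ext/{source_name}/region={region}/"
        f"accountId={account_id}/eventDay={event_day:%Y%m%d}/"
    )


key = "20240422_ls.s3.wazuh-test-events.2024-04-22T14.20.part00.txt"
print(partition_prefix("wazuh", "us-east-1", "123456789012", event_day_from_key(key)))
# ext/wazuh/region=us-east-1/accountId=123456789012/eventDay=20240422/
```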

Installing and configuring Logstash

Install Logstash on a dedicated server or on the server hosting the Wazuh Indexer. Logstash forwards the data from the Wazuh Indexer to the AWS S3 bucket created previously.

Follow the official documentation to install Logstash.

Install the logstash-input-opensearch plugin (in most cases, it is installed by default).

$ sudo /usr/share/logstash/bin/logstash-plugin install logstash-input-opensearch

Copy the Wazuh Indexer root certificate to any folder of your choice on the Logstash server (for example, /usr/share/logstash/root-ca.pem).

Grant the logstash user the required permissions to access the certificate, log directories, data directories, and configuration files.

$ sudo chmod 755 </PATH/TO/LOGSTASH_CERTS>/root-ca.pem
$ sudo chown -R logstash:logstash /var/log/logstash
$ sudo chmod -R 755 /var/log/logstash
$ sudo chown -R logstash:logstash /var/lib/logstash
$ sudo chown -R logstash:logstash /etc/logstash

Configuring the Logstash pipeline

A Logstash pipeline uses plugins to read the data from the Wazuh Indexer and send it to an AWS S3 bucket.

The Logstash pipeline requires access to the following secrets:

Wazuh Indexer credentials: INDEXER_USERNAME and INDEXER_PASSWORD.

AWS credentials for the account with permissions to write to the S3 bucket: AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.

AWS S3 bucket details: AWS_REGION and S3_BUCKET (the name of the S3 bucket for raw events).

Use the Logstash keystore to securely store these values.
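Logstash resolves ${NAME} references from the keystore or from environment variables, so a missing secret only shows up when the pipeline loads. A small pre-flight check can catch it earlier. In this sketch, the available names are assumed to come from the output of logstash-keystore list or from the environment. The function names are hypothetical.

```python
import re

# ${NAME} references in a pipeline file; ${NAME:default} forms are deliberately not matched.
PLACEHOLDER = re.compile(r"\$\{(\w+)\}")

# The secrets listed in this section.
REQUIRED_SECRETS = {
    "INDEXER_USERNAME", "INDEXER_PASSWORD",
    "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY",
    "AWS_REGION", "S3_BUCKET",
}


def referenced_secrets(pipeline: str) -> set[str]:
    """Names referenced as ${NAME} in the pipeline text."""
    return set(PLACEHOLDER.findall(pipeline))


def missing_secrets(pipeline: str, available: set[str]) -> set[str]:
    """Required or referenced names that are not in the available set."""
    return (referenced_secrets(pipeline) | REQUIRED_SECRETS) - available
```

For example, pass the text of the pipeline file together with the union of the keystore names and set(os.environ). An empty result means every reference can be resolved.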

Create the configuration file indexer-to-s3.conf in the /etc/logstash/conf.d/ folder:

$ sudo touch /etc/logstash/conf.d/indexer-to-s3.conf

Add the following configuration to the indexer-to-s3.conf file:

input {
  opensearch {
    hosts => ["<WAZUH_INDEXER_ADDRESS>:9200"]
    user => "${INDEXER_USERNAME}"
    password => "${INDEXER_PASSWORD}"
    ssl => true
    ca_file => "</PATH/TO/LOGSTASH_CERTS>/root-ca.pem"
    index => "wazuh-alerts-4.x-*"
    query => '{ "query": { "range": { "@timestamp": { "gt": "now-5m" } } } }'
    schedule => "*/5 * * * *"
  }
}

output {
  stdout {
    id => "output.stdout"
    codec => json_lines
  }
  s3 {
    id => "output.s3"
    access_key_id => "${AWS_ACCESS_KEY_ID}"
    secret_access_key => "${AWS_SECRET_ACCESS_KEY}"
    region => "${AWS_REGION}"
    bucket => "${S3_BUCKET}"
    codec => "json_lines"
    retry_count => 0
    validate_credentials_on_root_bucket => false
    prefix => "%{+YYYY}%{+MM}%{+dd}"
    server_side_encryption => true
    server_side_encryption_algorithm => "AES256"
    additional_settings => {
      "force_path_style" => true
    }
    time_file => 5
  }
}
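The query and schedule options work together. Every five minutes, the input asks for events whose @timestamp is newer than now minus five minutes. The hypothetical helper below generates both values for a different interval so that they stay consistent. It only accepts intervals that divide 60 evenly. A cron step such as */7 restarts at minute 0 every hour, which makes one run per hour follow the previous one after less than seven minutes, so some events would be fetched twice. Even with matching values, runs that start late or events indexed with a delay can still be missed or duplicated. Treat this as a sketch, not an exactly-once guarantee.

```python
import json


def window_settings(minutes: int) -> tuple[str, str]:
    """Return matching opensearch-input `query` and `schedule` values for an N-minute window."""
    if minutes <= 0 or 60 % minutes != 0:
        raise ValueError("minutes must divide 60 evenly so that every cron run is N minutes apart")
    query = {"query": {"range": {"@timestamp": {"gt": f"now-{minutes}m"}}}}
    schedule = f"*/{minutes} * * * *"
    return json.dumps(query), schedule


query, schedule = window_settings(5)
print(f"query => '{query}'")
# query => '{"query": {"range": {"@timestamp": {"gt": "now-5m"}}}}'
print(f'schedule => "{schedule}"')
# schedule => "*/5 * * * *"
```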

Running Logstash

Stop the Logstash service and test the pipeline configuration from the CLI:

Logstash 8.x and earlier versions:

$ sudo systemctl stop logstash
$ sudo -E /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/indexer-to-s3.conf --path.settings /etc/logstash --config.test_and_exit

Logstash 9.x and later versions:

$ sudo systemctl stop logstash
$ sudo -u logstash /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/indexer-to-s3.conf --path.settings /etc/logstash --config.test_and_exit

After confirming that the configuration loads correctly without errors, run Logstash as a service.

$ sudo systemctl enable logstash
$ sudo systemctl start logstash

OCSF Mapping

The integration maps Wazuh Security Events to the OCSF v1.1.0 Security Finding (2001) Class.

The tables below show how Wazuh Security Events are mapped into the OCSF Security Finding class.

Note

This mapping does not reflect any transformation or evaluation of the data. Some evaluation and transformation is necessary for a correct OCSF representation that meets all requirements.

Metadata

| OCSF Key | OCSF Value Type | Value |
|---|---|---|
| category_uid | Integer | 2 |
| category_name | String | "Findings" |
| class_uid | Integer | 2001 |
| class_name | String | "Security Finding" |
| type_uid | Long | 200101 |
| metadata.product.name | String | "Wazuh" |
| metadata.product.vendor_name | String | "Wazuh, Inc." |
| metadata.product.version | String | "4.9.0" |
| metadata.product.lang | String | "en" |
| metadata.log_name | String | "Security events" |
| metadata.log_provider | String | "Wazuh" |
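As an illustration, the constant part of every mapped event can be written as a nested Python dictionary. Dotted keys such as metadata.product.name become nested objects. The type_uid value follows the OCSF convention type_uid = class_uid * 100 + activity_id, using the activity_id of 1 listed in the Security events table. The names OCSF_METADATA and type_uid are hypothetical.

```python
def type_uid(class_uid: int, activity_id: int) -> int:
    """OCSF convention: type_uid = class_uid * 100 + activity_id."""
    return class_uid * 100 + activity_id


# Constant fields from the Metadata table; dotted OCSF keys become nested objects.
OCSF_METADATA = {
    "category_uid": 2,
    "category_name": "Findings",
    "class_uid": 2001,
    "class_name": "Security Finding",
    "type_uid": type_uid(2001, 1),  # 200101, with activity_id = 1
    "metadata": {
        "product": {
            "name": "Wazuh",
            "vendor_name": "Wazuh, Inc.",
            "version": "4.9.0",
            "lang": "en",
        },
        "log_name": "Security events",
        "log_provider": "Wazuh",
    },
}
```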

Security events

| OCSF Key | OCSF Value Type | Wazuh Event Value |
|---|---|---|
| activity_id | Integer | 1 |
| time | Timestamp | timestamp |
| message | String | rule.description |
| count | Integer | rule.firedtimes |
| finding.uid | String | id |
| finding.title | String | rule.description |
| finding.types | String Array | input.type |
| analytic.category | String | rule.groups |
| analytic.name | String | decoder.name |
| analytic.type | String | "Rule" |
| analytic.type_id | Integer | 1 |
| analytic.uid | String | rule.id |
| risk_score | Integer | rule.level |
| attacks.tactic.name | String | rule.mitre.tactic |
| attacks.technique.name | String | rule.mitre.technique |
| attacks.technique.uid | String | rule.mitre.id |
| attacks.version | String | "v13.1" |
| nist | String Array | rule.nist_800_53 |
| severity_id | Integer | convert(rule.level) |
| status_id | Integer | 99 |
| resources.name | String | agent.name |
| resources.uid | String | agent.id |
| data_sources | String Array | ['_index', 'location', 'manager.name'] |
| raw_data | String | full_log |
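The following sketch applies the table above to a single alert. It copies fields without the data transformations mentioned in the Note, so some values keep their Wazuh types (for example, rule.groups, which is a list). The thresholds in severity_id are an assumption that stands in for convert(rule.level). They bucket Wazuh rule levels (0 to 15) into the OCSF severity_id values 1 (Informational) to 5 (Critical) and are not taken from the integration code. All function names are hypothetical.

```python
from typing import Any

ATTACK_VERSION = "v13.1"


def dig(obj: Any, path: str, default: Any = None) -> Any:
    """Follow a dotted path such as "rule.mitre.id" through nested dicts."""
    for part in path.split("."):
        if not isinstance(obj, dict) or part not in obj:
            return default
        obj = obj[part]
    return obj


def severity_id(level: int) -> int:
    """Hypothetical convert(rule.level); these thresholds are an assumption."""
    if level >= 15:
        return 5  # Critical
    if level >= 11:
        return 4  # High
    if level >= 8:
        return 3  # Medium
    if level >= 4:
        return 2  # Low
    if level >= 0:
        return 1  # Informational
    return 0  # Unknown


def to_security_finding(alert: dict) -> dict:
    """Copy Wazuh alert fields into OCSF keys following the table (no type coercion)."""
    input_type = dig(alert, "input.type")
    return {
        "activity_id": 1,
        "time": dig(alert, "timestamp"),
        "message": dig(alert, "rule.description"),
        "count": dig(alert, "rule.firedtimes"),
        "finding": {
            "uid": dig(alert, "id"),
            "title": dig(alert, "rule.description"),
            "types": [input_type] if input_type is not None else [],
        },
        "analytic": {
            "category": dig(alert, "rule.groups"),
            "name": dig(alert, "decoder.name"),
            "type": "Rule",
            "type_id": 1,
            "uid": dig(alert, "rule.id"),
        },
        "risk_score": dig(alert, "rule.level"),
        "attacks": [{
            "tactic": {"name": dig(alert, "rule.mitre.tactic")},
            "technique": {"name": dig(alert, "rule.mitre.technique"),
                          "uid": dig(alert, "rule.mitre.id")},
            "version": ATTACK_VERSION,
        }],
        "nist": dig(alert, "rule.nist_800_53"),
        "severity_id": severity_id(dig(alert, "rule.level", -1)),  # missing level -> 0 (Unknown)
        "status_id": 99,
        "resources": [{"name": dig(alert, "agent.name"), "uid": dig(alert, "agent.id")}],
        "data_sources": [dig(alert, "_index"), dig(alert, "location"), dig(alert, "manager.name")],
        "raw_data": dig(alert, "full_log"),
    }
```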

Troubleshooting

Issue: The Wazuh alert data is available in the Amazon Security Lake S3 bucket, but the Glue Crawler fails to parse the data into the Security Lake.

Resolution: This issue typically occurs when the custom source created for the integration uses the wrong event class. Make sure you create the custom source with the Security Finding event class.

Issue: The Wazuh alert data is available in the raw events S3 bucket, but the Lambda function does not trigger or fails.

Resolution: This usually happens if the Lambda function is not properly configured or if the data is not in the correct format. Test the Lambda function as described in the Validation section.

Issue: Logstash fails to start with a permission error.

Resolution: The logstash user does not have permission to write to one or more required directories. Grant the necessary permissions:

$ sudo chown -R logstash:logstash /var/log/logstash
$ sudo chmod -R 755 /var/log/logstash
$ sudo chown -R logstash:logstash /var/lib/logstash
$ sudo chown -R logstash:logstash /etc/logstash

Issue: Logstash 9.0 or later fails to start when run as the root user.

Resolution: Starting from Logstash 9.0, running as the root user is blocked for security reasons. Run Logstash using the logstash system account:

$ sudo -u logstash /usr/share/logstash/bin/logstash \
  -f /etc/logstash/conf.d/indexer-to-s3.conf \
  --path.settings /etc/logstash --config.test_and_exit